Team

Senior Academic Staff

Dr. Jonas Auda

- Room: S-M 203

- Phone: +49 201 18-32982

- Email: jonas.auda (at) uni-due.de

- Consultation Hour: by appointment

- Address: Universität Duisburg-Essen, Institut für Informatik und Wirtschaftsinformatik (ICB), Mensch-Computer Interaktion, Schützenbahn 70, 45127 Essen

Bio:

Fields of Research:

Jonas Auda on Google Scholar and ResearchGate

Publications:

- Auda, Jonas; Grünefeld, Uwe; Faltaous, Sarah; Mayer, Sven; Schneegass, Stefan: A Scoping Survey on Cross-reality Systems. In: ACM Computing Surveys. 2023. doi:10.1145/3616536

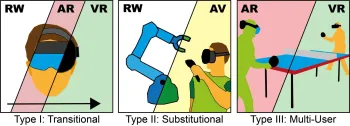

Immersive technologies such as Virtual Reality (VR) and Augmented Reality (AR) empower users to experience digital realities. Known as distinct technology classes, the lines between them are becoming increasingly blurry with recent technological advancements. New systems enable users to interact across technology classes or transition between them—referred to as cross-reality systems. Nevertheless, these systems are not well understood. Hence, in this article, we conducted a scoping literature review to classify and analyze cross-reality systems proposed in previous work. First, we define these systems by distinguishing three different types. Thereafter, we compile a literature corpus of 306 relevant publications, analyze the proposed systems, and present a comprehensive classification, including research topics, involved environments, and transition types. Based on the gathered literature, we extract nine guiding principles that can inform the development of cross-reality systems. We conclude with research challenges and opportunities.

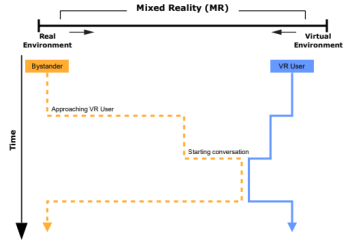

In the last decade, researchers contributed an increasing number of cross-reality systems and their evaluations. Going beyond individual technologies such as Virtual or Augmented Reality, these systems introduce novel approaches that help to solve relevant problems such as the integration of bystanders or physical objects. However, cross-reality systems are complex by nature, and describing the interactions and transitions taking place is a challenging task. Thus, in this paper, we propose the idea of the Actuality-Time Continuum that aims to enable researchers and designers alike to visualize complex cross-reality experiences. Moreover, we present four visualization examples that illustrate the potential of our proposal and conclude with an outlook on future perspectives.

- Liebers, Carina; Prochazka, Marvin; Pfützenreuter, Niklas; Liebers, Jonathan; Auda, Jonas; Gruenefeld, Uwe; Schneegass, Stefan: Pointing It out! Comparing Manual Segmentation of 3D Point Clouds between Desktop, Tablet, and Virtual Reality. In: International Journal of Human–Computer Interaction (2023), p. 1-15. doi:10.1080/10447318.2023.2238945

Scanning everyday objects with depth sensors is the state-of-the-art approach to generating point clouds for realistic 3D representations. However, the resulting point cloud data suffers from outliers and contains irrelevant data from neighboring objects. To obtain only the desired 3D representation, additional manual segmentation steps are required. In this paper, we compare three different technology classes as independent variables (desktop vs. tablet vs. virtual reality) in a within-subject user study (N = 18) to understand their effectiveness and efficiency for such segmentation tasks. We found that desktop and tablet still outperform virtual reality regarding task completion times, while we could not find a significant difference between them in the effectiveness of the segmentation. In the post hoc interviews, participants preferred the desktop due to its familiarity and temporal efficiency and virtual reality due to its given three-dimensional representation.

- Faltaous, Sarah; Prochazka, Marvin; Auda, Jonas; Keppel, Jonas; Wittig, Nick; Gruenefeld, Uwe; Schneegass, Stefan: Give Weight to VR: Manipulating Users’ Perception of Weight in Virtual Reality with Electric Muscle Stimulation, Association for Computing Machinery, New York, NY, USA 2022. (ISBN 9781450396905) doi:10.1145/3543758.3547571

Virtual Reality (VR) devices empower users to experience virtual worlds through rich visual and auditory sensations. However, believable haptic feedback that communicates the physical properties of virtual objects, such as their weight, is still unsolved in VR. The current trend towards hand tracking-based interactions, neglecting the typical controllers, further amplifies this problem. Hence, in this work, we investigate the combination of passive haptics and electric muscle stimulation to manipulate users’ perception of weight, and thus, simulate objects with different weights. In a laboratory user study, we investigate four differing electrode placements, stimulating different muscles, to determine which muscle results in the most potent perception of weight with the highest comfort. We found that actuating the biceps brachii or the triceps brachii muscles increased the weight perception of the users. Our findings lay the foundation for future investigations on weight perception in VR.

- Grünefeld, Uwe; Auda, Jonas; Mathis, Florian; Schneegass, Stefan; Khamis, Mohamed; Gugenheimer, Jan; Mayer, Sven: VRception: Rapid Prototyping of Cross-Reality Systems in Virtual Reality. In: Proceedings of the 41st ACM Conference on Human Factors in Computing Systems (CHI). Association for Computing Machinery, New Orleans, United States 2022. doi:10.1145/3491102.3501821

Cross-reality systems empower users to transition along the reality-virtuality continuum or collaborate with others experiencing different manifestations of it. However, prototyping these systems is challenging, as it requires sophisticated technical skills, time, and often expensive hardware. We present VRception, a concept and toolkit for quick and easy prototyping of cross-reality systems. By simulating all levels of the reality-virtuality continuum entirely in Virtual Reality, our concept overcomes the asynchronicity of realities, eliminating technical obstacles. Our VRception Toolkit leverages this concept to allow rapid prototyping of cross-reality systems and easy remixing of elements from all continuum levels. We replicated six cross-reality papers using our toolkit and presented them to their authors. Interviews with them revealed that our toolkit sufficiently replicates their core functionalities and allows quick iterations. Additionally, remote participants used our toolkit in pairs to collaboratively implement prototypes in about eight minutes that they would have otherwise expected to take days.

- Auda, Jonas; Grünefeld, Uwe; Schneegass, Stefan: If The Map Fits! Exploring Minimaps as Distractors from Non-Euclidean Spaces in Virtual Reality. In: CHI 22. ACM, 2022. doi:10.1145/3491101.3519621

- Auda, Jonas; Grünefeld, Uwe; Kosch, Thomas; Schneegass, Stefan: The Butterfly Effect: Novel Opportunities for Steady-State Visually-Evoked Potential Stimuli in Virtual Reality. In: Researchgate (Ed.): Augmented Humans. Kashiwa, Chiba, Japan 2022. doi:10.1145/3519391.3519397

- Keppel, Jonas; Liebers, Jonathan; Auda, Jonas; Gruenefeld, Uwe; Schneegass, Stefan: ExplAInable Pixels: Investigating One-Pixel Attacks on Deep Learning Models with Explainable Visualizations. In: Proceedings of the 21st International Conference on Mobile and Ubiquitous Multimedia. Association for Computing Machinery, New York, NY, USA 2022, p. 231-242. doi:10.1145/3568444.3568469

Nowadays, deep learning models enable numerous safety-critical applications, such as biometric authentication, medical diagnosis support, and self-driving cars. However, previous studies have frequently demonstrated that these models are attackable through slight modifications of their inputs, so-called adversarial attacks. Hence, researchers proposed investigating examples of these attacks with explainable artificial intelligence to understand them better. In this line, we developed an expert tool to explore adversarial attacks and defenses against them. To demonstrate the capabilities of our visualization tool, we worked with the publicly available CIFAR-10 dataset and generated one-pixel attacks. After that, we conducted an online evaluation with 16 experts. We found that our tool is usable and practical, providing evidence that it can support understanding, explaining, and preventing adversarial examples.

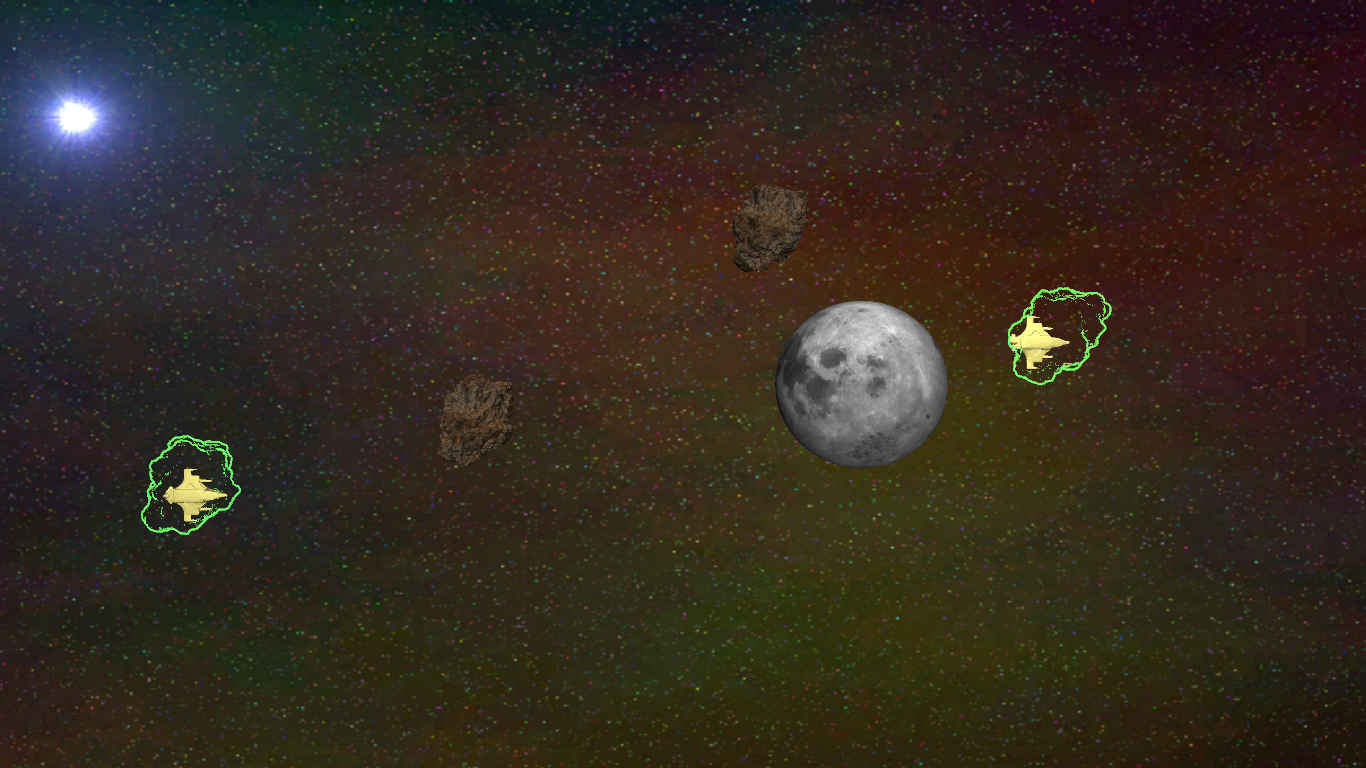

- Auda, Jonas; Mayer, Sven; Verheyen, Nils; Schneegass, Stefan: Flyables: Haptic Input Devices for Virtual Reality using Quadcopters. In: ResearchGate (Ed.): VRST. 2021. doi:10.1145/3489849.3489855

- Auda, Jonas; Grünefeld, Uwe; Pfeuffer, Ken; Rivu, Radiah; Alt, Florian; Schneegass, Stefan: I'm in Control! Transferring Object Ownership Between Remote Users with Haptic Props in Virtual Reality. In: Proceedings of the 9th ACM Symposium on Spatial User Interaction (SUI). Association for Computing Machinery, 2021. doi:10.1145/3485279.3485287

- Auda, Jonas; Grünefeld, Uwe; Schneegass, Stefan: Enabling Reusable Haptic Props for Virtual Reality by Hand Displacement. In: Proceedings of the Conference on Mensch Und Computer (MuC). Association for Computing Machinery, Ingolstadt, Germany 2021, p. 412-417. doi:10.1145/3473856.3474000

Virtual Reality (VR) enables compelling visual experiences. However, providing haptic feedback is still challenging. Previous work suggests utilizing haptic props to overcome such limitations and presents evidence that props could function as a single haptic proxy for several virtual objects. In this work, we displace users’ hands to account for virtual objects that are smaller or larger. Hence, the used haptic prop can represent several differently sized virtual objects. We conducted a user study (N = 12) and presented our participants with two tasks during which we continuously handed them the same haptic prop while they saw differently sized virtual objects in VR. In the first task, we used a linear hand displacement and increased the size of the virtual object to understand when participants perceive a mismatch. In the second task, we compared the linear displacement to logarithmic and exponential displacements. We found that participants, on average, do not perceive the size mismatch for virtual objects up to 50% larger than the physical prop. However, we did not find any differences between the explored displacements. We conclude our work with future research directions.

- Auda, Jonas; Weigel, Martin; Cauchard, Jessica; Schneegass, Stefan: Understanding Drone Landing on the Human Body. In: ResearchGate (Ed.): 23rd International Conference on Mobile Human-Computer Interaction. 2021. doi:10.1145/3447526.3472031

- Auda, Jonas; Heger, Roman; Gruenefeld, Uwe; Schneegaß, Stefan: VRSketch: Investigating 2D Sketching in Virtual Reality with Different Levels of Hand and Pen Transparency. In: 18th International Conference on Human–Computer Interaction (INTERACT). Springer, Bari, Italy 2021, p. 195-211. doi:10.1007/978-3-030-85607-6_14

Sketching is a vital step in design processes. While analog sketching on pen and paper is the de facto standard, Virtual Reality (VR) seems promising for improving the sketching experience. It provides myriads of new opportunities to express creative ideas. In contrast to reality, possible drawbacks of pen and paper drawing can be tackled by altering the virtual environment. In this work, we investigate how hand and pen transparency impacts users’ 2D sketching abilities. We conducted a lab study (N = 20) investigating different combinations of hand and pen transparency. Our results show that a more transparent pen helps one sketch more quickly, while a transparent hand slows one down. Further, we found that transparency improves sketching accuracy while drawing in the direction that is occupied by the user’s hand.

- Liebers, Jonathan; Abdelaziz, Mark; Mecke, Lukas; Saad, Alia; Auda, Jonas; Alt, Florian; Schneegaß, Stefan: Understanding User Identification in Virtual Reality Through Behavioral Biometrics and the Effect of Body Normalization. In: Proceedings of the 40th ACM Conference on Human Factors in Computing Systems (CHI). Association for Computing Machinery, Yokohama, Japan 2021. doi:10.1145/3411764.3445528

Virtual Reality (VR) is becoming increasingly popular both in the entertainment and professional domains. Behavioral biometrics have recently been investigated as a means to continuously and implicitly identify users in VR. Applications in VR can specifically benefit from this, for example, to adapt virtual environments and user interfaces as well as to authenticate users. In this work, we conduct a lab study (N = 16) to explore how accurately users can be identified during two task-driven scenarios based on their spatial movement. We show that an identification accuracy of up to 90% is possible across sessions recorded on different days. Moreover, we investigate the role of users’ physiology in behavioral biometrics by virtually altering and normalizing their body proportions. We find that body normalization in general increases the identification rate, in some cases by up to 38%; hence, it improves the performance of identification systems.

- Borsum, Florian; Pascher, Max; Auda, Jonas; Schneegass, Stefan; Lux, Gregor; Gerken, Jens: Stay on Course in VR: Comparing the Precision of Movement between Gamepad, Armswinger, and Treadmill: Kurs Halten in VR: Vergleich Der Bewegungspräzision von Gamepad, Armswinger Und Laufstall. In: Mensch Und Computer 2021. Association for Computing Machinery, New York, NY, USA 2021, p. 354-365. doi:10.1145/3473856.3473880

This paper investigates to what extent different locomotion techniques in Virtual Reality environments influence precision during interaction. Three techniques were examined in total: two of them integrate physical activity to achieve a high degree of realism in the movement (Armswinger, treadmill). A gamepad served as the third technique and as the baseline. In a study with 18 participants, the precision of these three locomotion techniques was examined across six different obstacles in a VR course. The results show that for individual obstacles that require a combination of forward and sideways movement (slalom, cliff) or that target speed (rail), the treadmill enables significantly more precise control than the Armswinger. Across the entire course, however, no input device is significantly more precise than another. Using the treadmill also takes significantly more time than the gamepad and the Armswinger. The study also showed that the goal of mapping a real walking motion 1:1 is still not achieved even with a treadmill, although the movement is nevertheless perceived as intuitive and immersive.

- Borsum, Florian; Pascher, Max; Auda, Jonas; Schneegass, Stefan; Lux, Gregor; Gerken, Jens: Stay on Course in VR: Comparing the Precision of Movement between Gamepad, Armswinger, and Treadmill: Kurs Halten in VR: Vergleich Der Bewegungspräzision von Gamepad, Armswinger Und Laufstall, Association for Computing Machinery, New York, NY, USA 2021. (ISBN 9781450386456) doi:10.1145/3473856.3473880

- Auda, Jonas; Gruenefeld, Uwe; Mayer, Sven: It Takes Two To Tango: Conflicts Between Users on the Reality-Virtuality Continuum and Their Bystanders. In: Proceedings of the 14th ACM Interactive Surfaces and Spaces (ISS). Association for Computing Machinery, Lisbon, Portugal 2020.

Over the last years, Augmented and Virtual Reality technology has become more immersive. However, when users immerse themselves in these digital realities, they detach from their real-world environments. This detachment creates conflicts that are problematic in public spaces such as planes but also in private settings. Consequently, on the one hand, detaching from the world creates an immersive experience for the user, and on the other hand, it creates a social conflict with bystanders. With this work, we highlight and categorize social conflicts caused by using immersive digital realities. We first present different social settings in which social conflicts arise and then provide an overview of investigated scenarios. Finally, we present research opportunities that help to address social conflicts between immersed users and bystanders.

- Schneegaß, Stefan; Auda, Jonas; Heger, Roman; Grünefeld, Uwe; Kosch, Thomas: EasyEG: A 3D-printable Brain-Computer Interface. In: Proceedings of the 33rd ACM Symposium on User Interface Software and Technology (UIST). Minnesota, USA 2020. doi:10.1145/3379350.3416189

Brain-Computer Interfaces (BCIs) are progressively adopted by the consumer market, making them available for a variety of use cases. However, off-the-shelf BCIs are limited in their adjustments towards individual head shapes, evaluation of scalp-electrode contact, and extension through additional sensors. This work presents EasyEG, a BCI headset that is adaptable to individual head shapes and offers adjustable electrode-scalp contact to improve measuring quality. EasyEG consists of 3D-printed and low-cost components that can be extended by additional sensing hardware, hence expanding the application domain of current BCIs. We conclude with use cases that demonstrate the potential of our EasyEG headset.

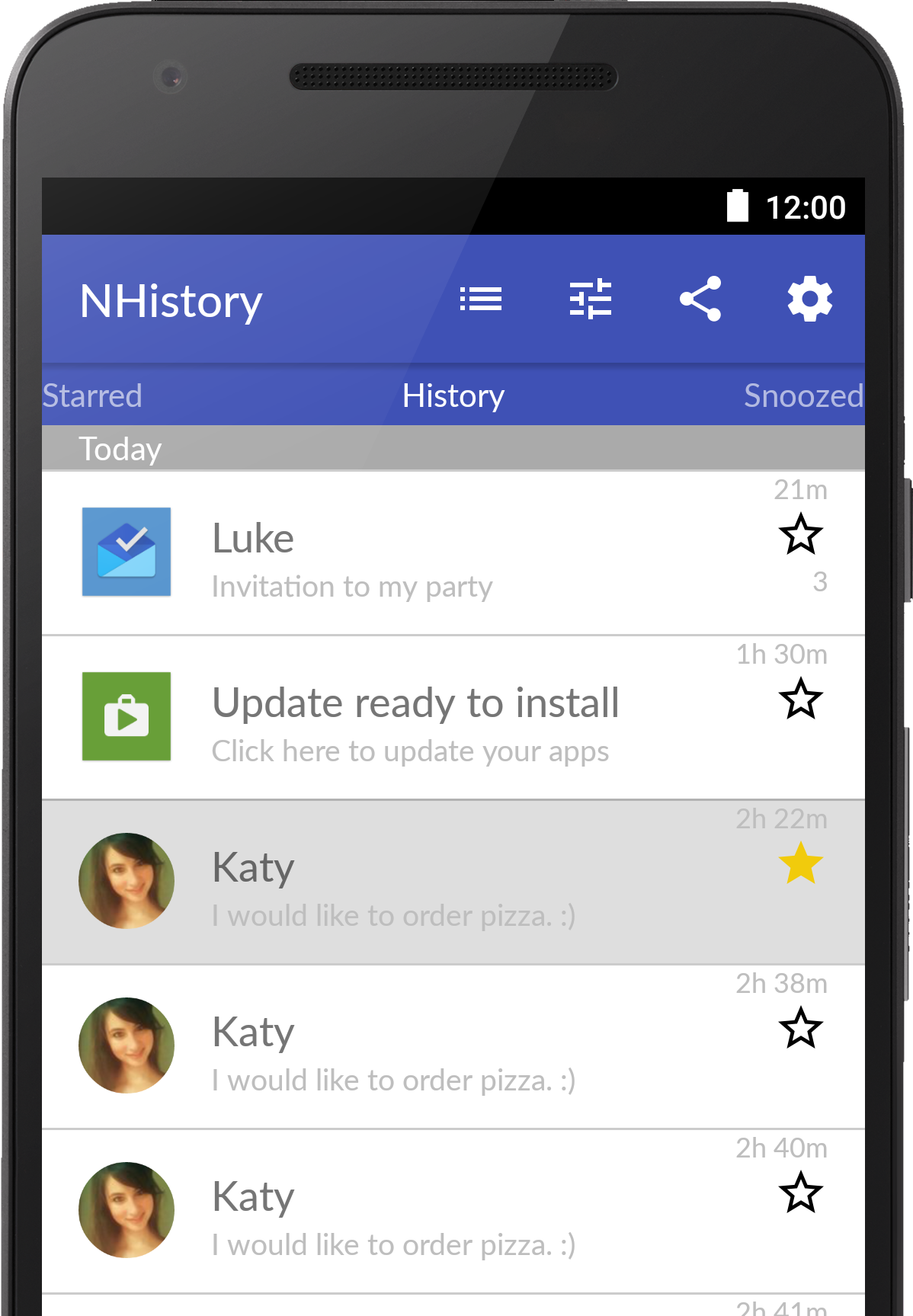

- Agarwal, Shivam; Auda, Jonas; Schneegaß, Stefan; Beck, Fabian: A Design and Application Space for Visualizing User Sessions of Virtual and Mixed Reality Environments. In: VMV2020. ACM, 2020. doi:10.2312/vmv.20201194

- Poguntke, Romina; Schneegass, Christina; van der Vekens, Lucas; Rzayev, Rufat; Auda, Jonas; Schneegass, Stefan; Schmidt, Albrecht: NotiModes: an investigation of notification delay modes and their effects on smartphone users. In: MuC '20: Proceedings of the Conference on Mensch und Computer. ACM, Magdeburg, Germany 2020. doi:10.1145/3404983.3410006

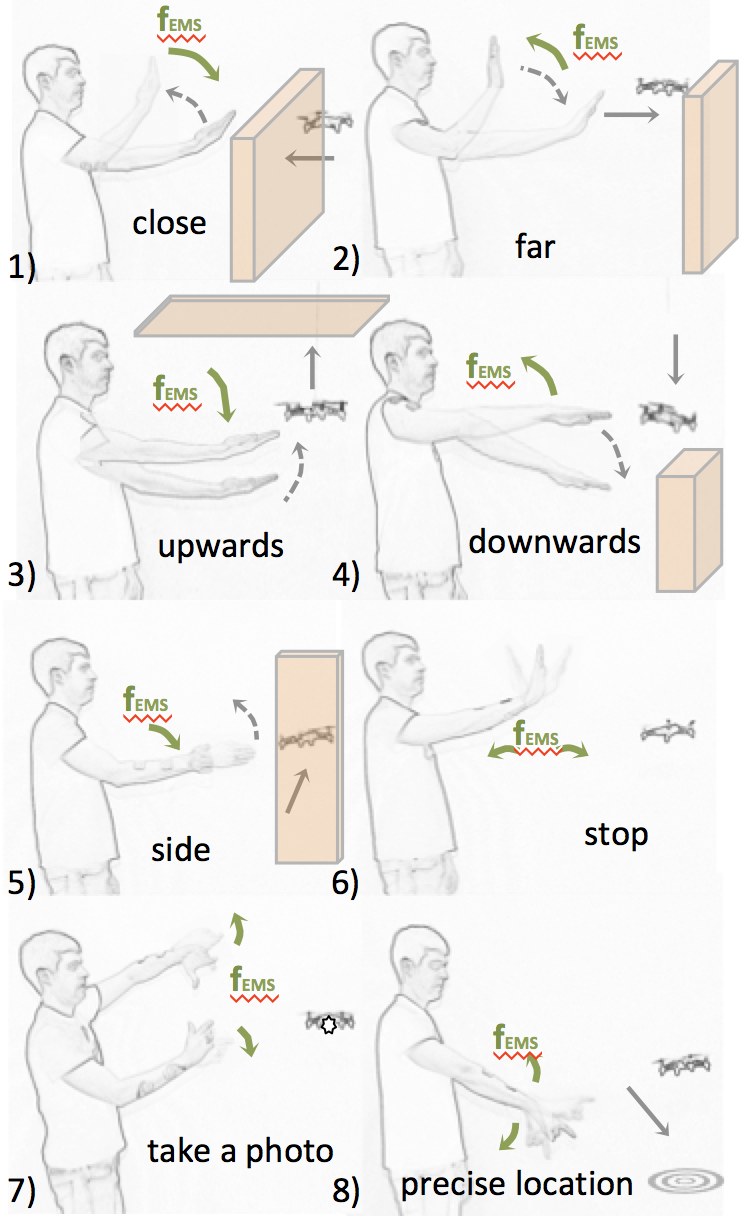

- Pfeiffer, Max; Medrano, Samuel Navas; Auda, Jonas; Schneegass, Stefan: STOP! Enhancing Drone Gesture Interaction with Force Feedback. In: CHI'19 Proceedings. HAL, Glasgow, UK 2019. https://hal.archives-ouvertes.fr/hal-02128395/document

- Auda, Jonas; Pascher, Max; Schneegass, Stefan: Around the (Virtual) World - Infinite Walking in Virtual Reality Using Electrical Muscle Stimulation. In: ACM (Ed.): CHI'19 Proceedings. Glasgow 2019. doi:10.1145/3290605.3300661

Virtual worlds are infinite environments in which the user can move around freely. When shifting from controller-based movement to regular walking as an input, the limitation of the real world also limits the virtual world. Tackling this challenge, we propose the use of electrical muscle stimulation to limit the necessary real-world space to create an unlimited walking experience. We thereby actuate the users' legs in a way that they deviate from their straight route and thus walk in circles in the real world while still walking straight in the virtual world. We report on a study comparing this approach to vision shift, the state-of-the-art approach, as well as combining both approaches. The results show that particularly combining both approaches yields high potential to create an infinite walking experience.

- Antoun, Sara; Auda, Jonas; Schneegass, Stefan: SlidAR - Towards using AR in Education. In: MUM 2018: Proceedings of the 17th International Conference on Mobile and Ubiquitous Multimedia. ACM, Cairo, Egypt 2018. doi:10.1145/3282894.3289744

- Auda, Jonas; Hoppe, Matthias; Amiraslanov, Orkhan; Zhou, Bo; Knierim, Pascal; Schneegass, Stefan; Schmidt, Albrecht; Lukowicz, Paul: LYRA - smart wearable in-flight service assistant. In: ISWC '18: Proceedings of the 2018 ACM International Symposium on Wearable Computers. ACM, Singapore, Singapore 2018. doi:10.1145/3267242.3267282

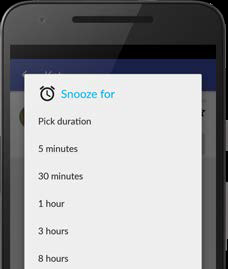

- Weber, Dominik; Voit, Alexandra; Auda, Jonas; Schneegass, Stefan; Henze, Niels: Snooze! - investigating the user-defined deferral of mobile notifications. In: MobileHCI '18: Proceedings of the 20th International Conference on Human-Computer Interaction with Mobile Devices and Services. ACM, Barcelona, Spain 2018. doi:10.1145/3229434.3229436

- Auda, Jonas; Weber, Dominik; Voit, Alexandra; Schneegass, Stefan: Understanding User Preferences towards Rule-based Notification Deferral. In: CHI EA '18: Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems. ACM, Montreal, Canada 2018. doi:10.1145/3170427.3188688

- Alt, Florian; Schneegass, Stefan; Auda, Jonas; Rzayev, Rufat; Broy, Nora: Using eye-tracking to support interaction with layered 3D interfaces on stereoscopic displays. In: IUI '14: Proceedings of the 19th international conference on Intelligent User Interfaces. ACM, Haifa, Israel 2014. doi:10.1145/2557500.2557518