Team:

Research Associate

Jonathan Liebers, M.Sc.

- Room: S-M 204

- Phone: +49 201 18-34936

- Email: jonathan.liebers (at) uni-due.de

- Office hours: by appointment

- Address: Universität Duisburg-Essen, Institut für Informatik und Wirtschaftsinformatik (ICB), Mensch-Computer Interaktion, Schützenbahn 70, 45127 Essen

Publications:

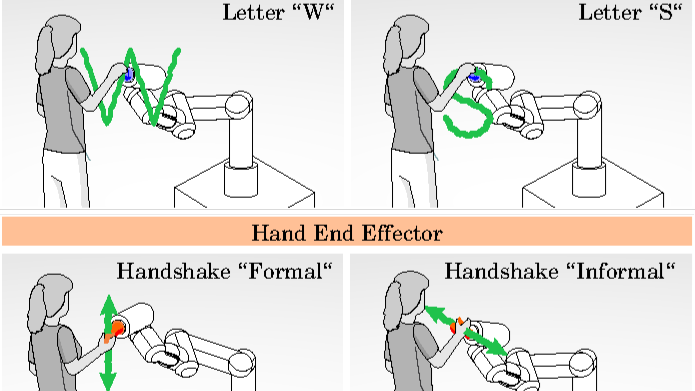

- Pascher, Max; Saad, Alia; Liebers, Jonathan; Heger, Roman; Gerken, Jens; Schneegass, Stefan; Gruenefeld, Uwe: Hands-On Robotics: Enabling Communication Through Direct Gesture Control. In: Companion of the 2024 ACM/IEEE International Conference on Human-Robot Interaction (HRI '24 Companion), March 11-14, 2024, Boulder, CO, USA. ACM, Boulder, Colorado, USA 2024. doi:10.1145/3610978.3640635

Effective Human-Robot Interaction (HRI) is fundamental to seamlessly integrating robotic systems into our daily lives. However, current communication modes require additional technological interfaces, which can be cumbersome and indirect. This paper presents a novel approach, using direct motion-based communication by moving a robot's end effector. Our strategy enables users to communicate with a robot by using four distinct gestures: two handshakes ('formal' and 'informal') and two letters ('W' and 'S'). As a proof-of-concept, we conducted a user study with 16 participants, capturing subjective experience ratings and objective data for training machine learning classifiers. Our findings show that the four different gestures performed by moving the robot's end effector can be distinguished with close to 100% accuracy. Our research offers implications for the design of future HRI interfaces, suggesting that motion-based interaction can empower human operators to communicate directly with robots, removing the necessity for additional hardware.

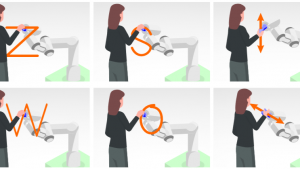

- Saad, Alia; Pascher, Max; Kassem, Khaled; Heger, Roman; Liebers, Jonathan; Schneegass, Stefan; Gruenefeld, Uwe: Hand-in-Hand: Investigating Mechanical Tracking for User Identification in Cobot Interaction. In: Proceedings of International Conference on Mobile and Ubiquitous Multimedia (MUM). Vienna, Austria 2023. doi:10.1145/3626705.3627771

Robots play a vital role in modern automation, with applications in manufacturing and healthcare. Collaborative robots integrate human and robot movements. Therefore, it is essential to ensure that interactions involve qualified, and thus identified, individuals. This study delves into a new approach: identifying individuals through robot arm movements. Unlike in previous methods, users guide the robot, and the robot senses the movements via joint sensors. We asked 18 participants to perform six gestures, revealing their potential use as unique behavioral traits or biometrics and achieving an F1-score of up to 0.87, which suggests direct robot interactions as a promising avenue for implicit and explicit user identification.

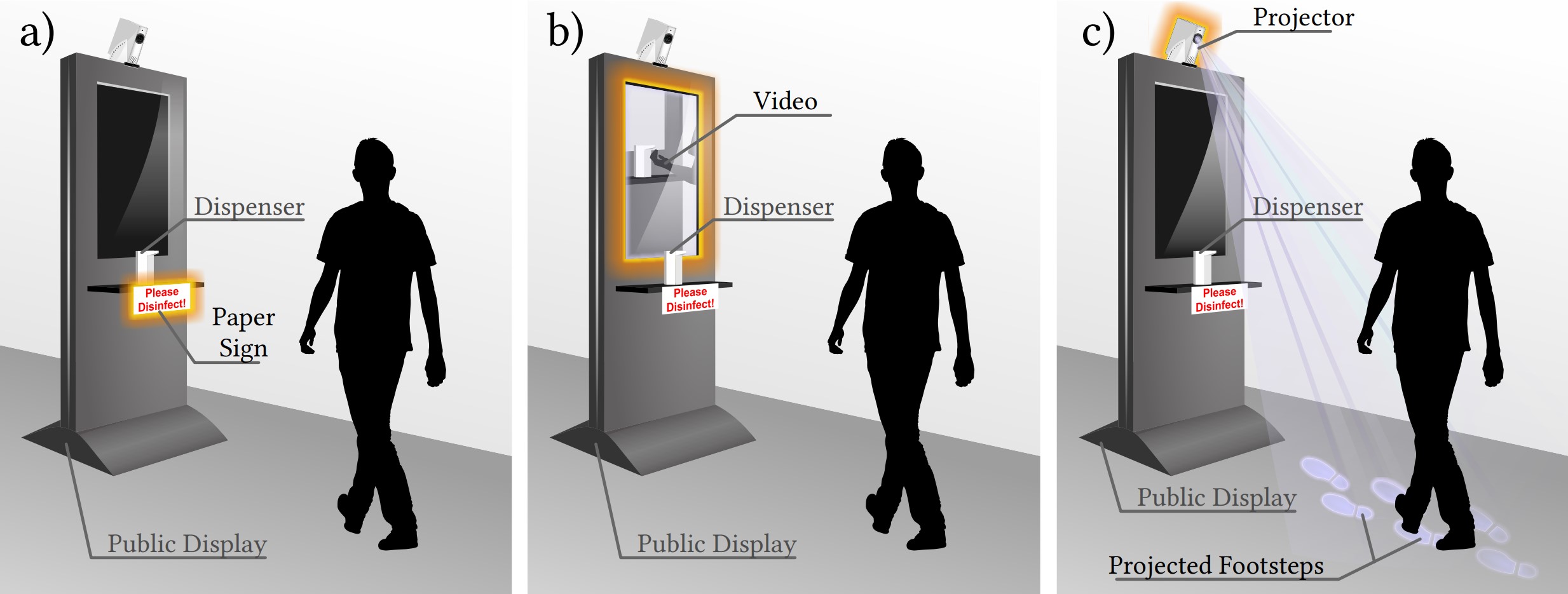

- Keppel, Jonas; Strauss, Marvin; Faltaous, Sarah; Liebers, Jonathan; Heger, Roman; Gruenefeld, Uwe; Schneegass, Stefan: Don't Forget to Disinfect: Understanding Technology-Supported Hand Disinfection Stations. In: Proc. ACM Hum.-Comput. Interact., vol. 7 (2023). doi:10.1145/3604251

The global COVID-19 pandemic created a constant need for hand disinfection. While it is still essential, disinfection use is declining with the decrease in perceived personal risk (e.g., as a result of vaccination). Thus this work explores using different visual cues to act as reminders for hand disinfection. We investigated different public display designs using (1) paper-based only, adding (2) screen-based, or (3) projection-based visual cues. To gain insights into these designs, we conducted semi-structured interviews with passersby (N=30). Our results show that the screen- and projection-based conditions were perceived as more engaging. Furthermore, we conclude that the disinfection process consists of four steps that can be supported: drawing attention to the disinfection station, supporting the (subconscious) understanding of the interaction, motivating hand disinfection, and performing the action itself. We conclude with design implications for technology-supported disinfection.

- Liebers, Carina; Prochazka, Marvin; Pfützenreuter, Niklas; Liebers, Jonathan; Auda, Jonas; Gruenefeld, Uwe; Schneegass, Stefan: Pointing It out! Comparing Manual Segmentation of 3D Point Clouds between Desktop, Tablet, and Virtual Reality. In: International Journal of Human–Computer Interaction (2023), pp. 1-15. doi:10.1080/10447318.2023.2238945

Scanning everyday objects with depth sensors is the state-of-the-art approach to generating point clouds for realistic 3D representations. However, the resulting point cloud data suffers from outliers and contains irrelevant data from neighboring objects. To obtain only the desired 3D representation, additional manual segmentation steps are required. In this paper, we compare three different technology classes as independent variables (desktop vs. tablet vs. virtual reality) in a within-subject user study (N = 18) to understand their effectiveness and efficiency for such segmentation tasks. We found that desktop and tablet still outperform virtual reality regarding task completion times, while we could not find a significant difference between them in the effectiveness of the segmentation. In the post hoc interviews, participants preferred the desktop due to its familiarity and temporal efficiency and virtual reality due to its given three-dimensional representation.

- Grünefeld, Uwe; Geilen, Alexander; Liebers, Jonathan; Wittig, Nick; Koelle, Marion; Schneegass, Stefan: ARm Haptics: 3D-Printed Wearable Haptics for Mobile Augmented Reality. In: Proc. ACM Hum.-Comput. Interact., vol. 6 (2022). doi:10.1145/3546728

Augmented Reality (AR) technology enables users to superpose virtual content onto their environments. However, interacting with virtual content while mobile often requires users to perform interactions in mid-air, resulting in a lack of haptic feedback. Hence, in this work, we present the ARm Haptics system, which is worn on the user's forearm and provides 3D-printed input modules, each representing well-known interaction components such as buttons, sliders, and rotary knobs. These modules can be changed quickly, thus allowing users to adapt them to their current use case. After an iterative development of our system, which involved a focus group with HCI researchers, we conducted a user study to compare the ARm Haptics system to hand-tracking-based interaction in mid-air (baseline). Our findings show that using our system results in significantly lower error rates for slider and rotary input. Moreover, use of the ARm Haptics system results in significantly higher pragmatic quality and lower effort, frustration, and physical demand. Following our findings, we discuss opportunities for haptics worn on the forearm.

- Abdrabou, Yasmeen; Rivu, Radiah; Ammar, Tarek; Liebers, Jonathan; Saad, Alia; Liebers, Carina; Gruenefeld, Uwe; Knierim, Pascal; Khamis, Mohamed; Mäkelä, Ville; Schneegass, Stefan; Alt, Florian: Understanding Shoulder Surfer Behavior Using Virtual Reality. In: Proceedings of the IEEE conference on Virtual Reality and 3D User Interfaces (IEEE VR). IEEE, Christchurch, New Zealand 2022.

We explore how attackers behave during shoulder surfing. Unfortunately, such behavior is challenging to study as it is often opportunistic and can occur wherever potential attackers can observe other people’s private screens. Therefore, we investigate shoulder surfing using virtual reality (VR). We recruited 24 participants and observed their behavior in two virtual waiting scenarios: at a bus stop and in an open office space. In both scenarios, avatars interacted with private screens displaying different content, thus providing opportunities for shoulder surfing. From the results, we derive an understanding of factors influencing shoulder surfing behavior.

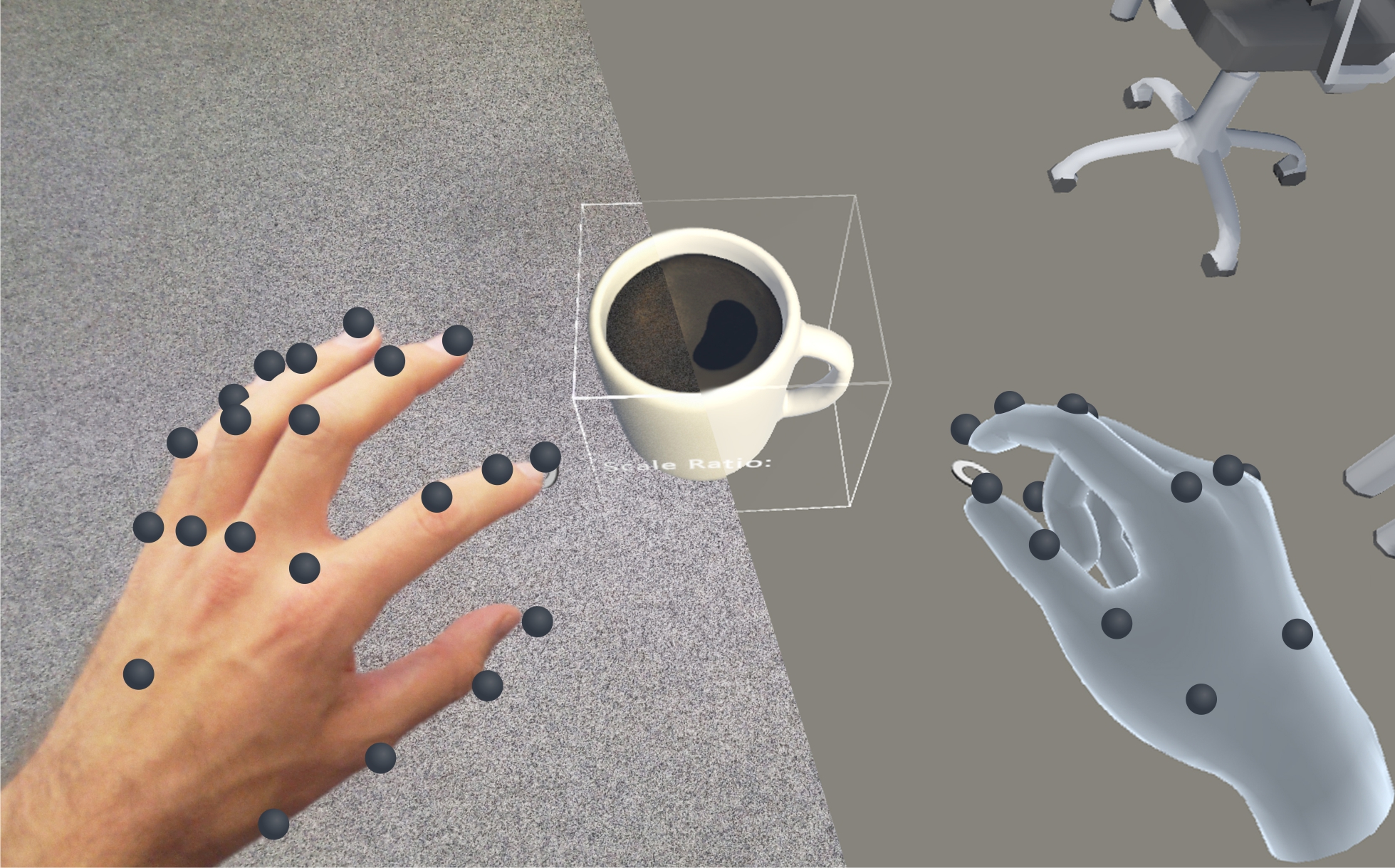

- Liebers, Jonathan; Brockel, Sascha; Gruenefeld, Uwe; Schneegass, Stefan: Identifying Users by Their Hand Tracking Data in Augmented and Virtual Reality. In: International Journal of Human–Computer Interaction (2022). doi:10.1080/10447318.2022.2120845

Nowadays, Augmented and Virtual Reality devices are widely available and are often shared among users due to their high cost. Thus, distinguishing users to offer personalized experiences is essential. However, currently used explicit user authentication (e.g., entering a password) is tedious and vulnerable to attack. Therefore, this work investigates the feasibility of implicitly identifying users by their hand tracking data. In particular, we identify users by their uni- and bimanual finger behavior gathered from their interaction with eight different universal interface elements, such as buttons and sliders. In two sessions, we recorded the tracking data of 16 participants while they interacted with various interface elements in Augmented and Virtual Reality. We found that user identification is possible with up to 95% accuracy across sessions using an explainable machine learning approach. We conclude our work by discussing differences between interface elements and feature importance to provide implications for behavioral biometric systems.

- Keppel, Jonas; Liebers, Jonathan; Auda, Jonas; Gruenefeld, Uwe; Schneegass, Stefan: ExplAInable Pixels: Investigating One-Pixel Attacks on Deep Learning Models with Explainable Visualizations. In: Proceedings of the 21st International Conference on Mobile and Ubiquitous Multimedia. Association for Computing Machinery, New York, NY, USA 2022, pp. 231-242. doi:10.1145/3568444.3568469

Nowadays, deep learning models enable numerous safety-critical applications, such as biometric authentication, medical diagnosis support, and self-driving cars. However, previous studies have frequently demonstrated that these models are attackable through slight modifications of their inputs, so-called adversarial attacks. Hence, researchers proposed investigating examples of these attacks with explainable artificial intelligence to understand them better. In this line, we developed an expert tool to explore adversarial attacks and defenses against them. To demonstrate the capabilities of our visualization tool, we worked with the publicly available CIFAR-10 dataset and generated one-pixel attacks. After that, we conducted an online evaluation with 16 experts. We found that our tool is usable and practical, providing evidence that it can support understanding, explaining, and preventing adversarial examples.

- Liebers, Jonathan; Horn, Patrick; Burschik, Christian; Gruenefeld, Uwe; Schneegass, Stefan: Using Gaze Behavior and Head Orientation for Implicit Identification in Virtual Reality. In: Proceedings of the 27th ACM Symposium on Virtual Reality Software and Technology (VRST). Association for Computing Machinery, Osaka, Japan 2021. doi:10.1145/3489849.3489880

Identifying users of a Virtual Reality (VR) headset provides designers of VR content with the opportunity to adapt the user interface, set user-specific preferences, or adjust the level of difficulty either for games or training applications. While most identification methods currently rely on explicit input, implicit user identification is less disruptive and does not impact the immersion of the users. In this work, we introduce a biometric identification system that employs the user’s gaze behavior as a unique, individual characteristic. In particular, we focus on the user’s gaze behavior and head orientation while following a moving stimulus. We verify our approach in a user study. A hybrid post-hoc analysis results in an identification accuracy of up to 75% for an explainable machine learning algorithm and up to 100% for a deep learning approach. We conclude by discussing application scenarios in which our approach can be used to implicitly identify users.

- Saad, Alia; Liebers, Jonathan; Gruenefeld, Uwe; Alt, Florian; Schneegass, Stefan: Understanding Bystanders’ Tendency to Shoulder Surf Smartphones Using 360-Degree Videos in Virtual Reality. In: Proceedings of the 23rd International Conference on Mobile Human-Computer Interaction (MobileHCI). Association for Computing Machinery, Toulouse, France 2021. doi:10.1145/3447526.3472058

Shoulder surfing is an omnipresent risk for smartphone users. However, investigating these attacks in the wild is difficult because of privacy concerns, lack of consent, or the fact that asking for consent would influence people’s behavior (e.g., they could try to avoid looking at smartphones). Thus, we propose utilizing 360-degree videos in Virtual Reality (VR), recorded in staged real-life situations on public transport. Despite differences between perceiving videos in VR and experiencing real-world situations, we believe this approach allows novel insights into observers’ tendency to shoulder surf another person’s phone authentication and interaction. By conducting a study (N=16), we demonstrate that a better understanding of shoulder surfers’ behavior can be obtained by analyzing gaze data during video watching and comparing it to post-hoc interview responses. On average, participants looked at the phone for about 11% of the time it was visible and could remember half of the applications used.

- Liebers, Jonathan; Abdelaziz, Mark; Mecke, Lukas; Saad, Alia; Auda, Jonas; Alt, Florian; Schneegaß, Stefan: Understanding User Identification in Virtual Reality Through Behavioral Biometrics and the Effect of Body Normalization. In: Proceedings of the 40th ACM Conference on Human Factors in Computing Systems (CHI). Association for Computing Machinery, Yokohama, Japan 2021. doi:10.1145/3411764.3445528

Virtual Reality (VR) is becoming increasingly popular both in the entertainment and professional domains. Behavioral biometrics have recently been investigated as a means to continuously and implicitly identify users in VR. Applications in VR can specifically benefit from this, for example, to adapt virtual environments and user interfaces as well as to authenticate users. In this work, we conduct a lab study (N = 16) to explore how accurately users can be identified during two task-driven scenarios based on their spatial movement. We show that an identification accuracy of up to 90% is possible across sessions recorded on different days. Moreover, we investigate the role of users’ physiology in behavioral biometrics by virtually altering and normalizing their body proportions. We find that body normalization in general increases the identification rate, in some cases by up to 38%; hence, it improves the performance of identification systems.

- Liebers, Jonathan; Schneegass, Stefan: Gaze-based Authentication in Virtual Reality. In: ETRA. ACM, 2020. doi:10.1145/3379157.3391421

- Liebers, Jonathan; Schneegass, Stefan: Introducing Functional Biometrics: Using Body-Reflections as a Novel Class of Biometric Authentication Systems. In: CHI Extended Abstracts 2020. ACM, Honolulu, HI, USA 2020. doi:10.1145/3334480.3383059

- Faltaous, Sarah; Liebers, Jonathan; Abdelrahman, Yomna; Alt, Florian; Schneegass, Stefan: VPID: Towards Vein Pattern Identification Using Thermal Imaging. In: i-com (2019) no. 18 (3), pp. 259-270. doi:10.1515/icom-2019-0009

Biometric authentication has received considerable attention lately. The vein pattern on the back of the hand is a unique biometric that can be measured through thermal imaging. Detecting this pattern provides an implicit approach that can authenticate users while interacting. In this paper, we present the Vein-Identification system, called VPID. It consists of a vein pattern recognition pipeline and an authentication part. We implemented six different vein-based authentication approaches by combining thermal imaging and computer vision algorithms. Through a study, we show that the approaches achieve a low false-acceptance rate (“FAR”) and a low false-rejection rate (“FRR”). Our findings show that the best approach is the Hausdorff distance-difference applied in combination with a Convolutional Neural Network (CNN) classification of stacked images.